PCIe Gen5 + CXL Data Flow: Visualizing the Future of System Architecture

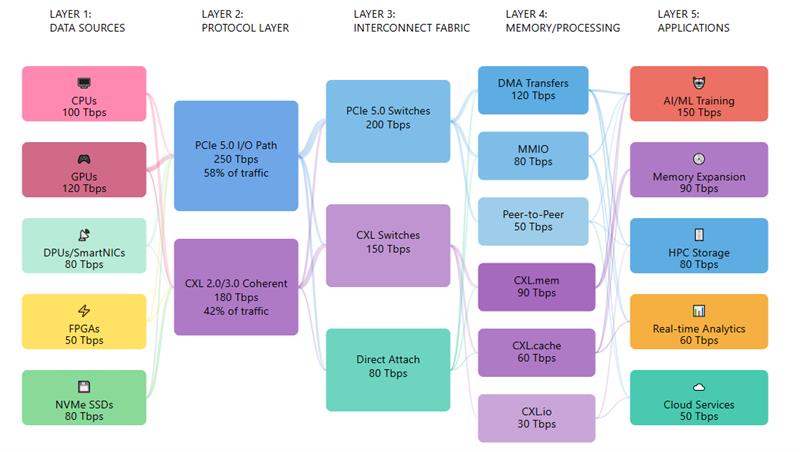

This Sankey diagram illustrates the complete journey of 430 Tbps of traffic across compute, interconnect, memory, and application layers, showing why PCIe and CXL must be designed together.


🔷Understanding how data and memory move through modern platforms is key to building scalable AI, cloud, and high-performance systems.


🔷The Flow Architecture:

Traffic originates at CPUs, GPUs, DPUs, FPGAs, and NVMe SSDs, which together generate 430 Tbps, and then enters the protocol layer, where a critical split occurs:

• PCIe Gen5 carries 250 Tbps (58%) of traditional I/O traffic

• CXL 2.0/3.0 handles 180 Tbps (42%) of cache-coherent memory traffic

This split defines the evolution from device-centric I/O to memory-centric system design.
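The split can be checked with a few lines of arithmetic. The sketch below encodes the protocol-layer flows from the diagram as a Python dictionary and confirms that the two branches add up to the 430 Tbps entering the layer and round to the 58% / 42% shares quoted above. The names `PROTOCOL_FLOWS_TBPS` and `share_percent` are illustrative, not taken from the original.

```python
# Protocol-layer flows quoted in the post, in Tbps.
# PROTOCOL_FLOWS_TBPS and share_percent are illustrative names.
TOTAL_TBPS = 430

PROTOCOL_FLOWS_TBPS = {
    "PCIe Gen5": 250,    # traditional I/O traffic
    "CXL 2.0/3.0": 180,  # cache-coherent memory traffic
}


def share_percent(flow_tbps: float, total_tbps: float) -> int:
    """Return a flow's share of the total, rounded to a whole percent."""
    return round(100 * flow_tbps / total_tbps)


# In a Sankey diagram, the outgoing branches must add up to the incoming flow.
assert sum(PROTOCOL_FLOWS_TBPS.values()) == TOTAL_TBPS

for name, tbps in PROTOCOL_FLOWS_TBPS.items():
    print(f"{name}: {tbps} Tbps ({share_percent(tbps, TOTAL_TBPS)}%)")
```

Running it prints 58% for PCIe Gen5 and 42% for CXL, matching the figures in the list.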

🔷Interconnect Fabric Enables Scale:

PCIe Gen5 switches, CXL switches, and direct-attach links form the backbone of the platform.

• PCIe focuses on high-throughput DMA, MMIO, and peer-to-peer transfers

• CXL introduces memory pooling and coherency across devices, enabling disaggregated and composable systems.
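To make "memory pooling" more concrete, here is a toy model (not a CXL API): a shared pool of capacity, assumed to sit behind a CXL switch, that hosts borrow from and return to instead of being limited to their own socket-attached DRAM. The class `MemoryPool`, the host names, and the capacities are all hypothetical. In real systems, pooled capacity is assigned through the CXL fabric manager and the host OS, and this sketch does not model either.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryPool:
    """Toy model of a CXL-attached memory pool shared by several hosts.

    Capacity is tracked in GiB; all names and sizes are hypothetical.
    """

    capacity_gib: int
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def free_gib(self) -> int:
        # Capacity not currently lent to any host.
        return self.capacity_gib - sum(self.allocations.values())

    def allocate(self, host: str, gib: int) -> bool:
        """Grant `gib` to `host` if the pool has room; return success."""
        if gib <= 0 or gib > self.free_gib:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + gib
        return True

    def release(self, host: str, gib: int) -> None:
        """Return up to `gib` of a host's capacity to the shared pool."""
        held = self.allocations.get(host, 0)
        remaining = held - min(gib, held)
        if remaining:
            self.allocations[host] = remaining
        else:
            self.allocations.pop(host, None)


pool = MemoryPool(capacity_gib=1024)
pool.allocate("host-a", 512)   # a training job grows into pooled memory
pool.allocate("host-b", 256)
print(pool.free_gib)           # 256
pool.release("host-a", 512)    # capacity returns to the pool for other hosts
print(pool.free_gib)           # 768
```

The point of the model is that capacity follows demand across hosts. With socket-bound memory, host-a's idle 512 GiB could not be lent to anyone else.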

🔷Memory and Processing in Action:

• PCIe paths serve storage, networking, and accelerator data movement.

• In parallel, CXL.mem and CXL.cache carry 90 Tbps of memory-expansion traffic and 60 Tbps of cache-coherent access, respectively. This relieves pressure on socket-attached DRAM while keeping latency low for compute-intensive workloads.
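The CXL sub-flows quoted here can be compared against the 180 Tbps CXL branch from the protocol split. The minimal sketch below (the function name `unitemized_tbps` is illustrative) shows that CXL.mem and CXL.cache together account for 150 Tbps. The post does not say where the remaining 30 Tbps goes, so the code only reports it as unitemized rather than assigning it to any protocol.

```python
# CXL branch and its sub-flows as quoted in the post, in Tbps.
CXL_TOTAL_TBPS = 180
CXL_SUBFLOWS_TBPS = {
    "CXL.mem (memory expansion)": 90,
    "CXL.cache (cache-coherent access)": 60,
}


def unitemized_tbps(parent_tbps: float, children: dict[str, float]) -> float:
    """Return the part of a parent flow not covered by its listed children.

    Raises ValueError if the children exceed the parent, which would
    violate flow conservation in a Sankey diagram.
    """
    covered = sum(children.values())
    if covered > parent_tbps:
        raise ValueError(f"children sum to {covered}, more than {parent_tbps}")
    return parent_tbps - covered


print(unitemized_tbps(CXL_TOTAL_TBPS, CXL_SUBFLOWS_TBPS))  # 30
```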

🔷From Interconnect to Applications:

• AI/ML training consumes 150 Tbps by combining PCIe-attached GPUs with CXL-based memory expansion.

• High-performance storage, real-time analytics, and cloud services are dynamically served across both paths, depending on latency and coherency requirements.
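One way to read "dynamically served across both paths" is as a per-flow routing decision. The sketch below shows one hypothetical policy, not a mechanism described in the post: flows that need a device to cache host memory coherently go to CXL.cache, flows that treat remote capacity as byte-addressable memory go to CXL.mem, and bulk block transfers stay on PCIe DMA. The `Flow` fields, the workload names, and the rule order are assumptions, and latency is deliberately left out of this simplified model.

```python
from dataclasses import dataclass
from enum import Enum


class Path(Enum):
    PCIE_DMA = "PCIe Gen5 (DMA / MMIO / P2P)"
    CXL_MEM = "CXL.mem (memory expansion)"
    CXL_CACHE = "CXL.cache (coherent access)"


@dataclass(frozen=True)
class Flow:
    """Hypothetical description of a workload's traffic requirements."""

    name: str
    needs_coherency: bool   # device must cache host memory coherently
    needs_load_store: bool  # accessed as byte-addressable memory, not blocks


def choose_path(flow: Flow) -> Path:
    """Illustrative policy: coherency first, then memory semantics, else DMA."""
    if flow.needs_coherency:
        return Path.CXL_CACHE
    if flow.needs_load_store:
        return Path.CXL_MEM
    return Path.PCIE_DMA


workloads = [
    Flow("NVMe storage reads", needs_coherency=False, needs_load_store=False),
    Flow("analytics working set beyond local DRAM", needs_coherency=False, needs_load_store=True),
    Flow("accelerator sharing host data structures", needs_coherency=True, needs_load_store=True),
]

for w in workloads:
    print(f"{w.name} -> {choose_path(w).value}")
```

Checking coherency first reflects the idea that a coherent path also covers plain memory access, while the reverse is not true.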

🔷The Key Insight:

• PCIe moves data fast.

• CXL changes how memory is shared.

With 42% of system traffic leveraging CXL, modern platforms shift from fixed, socket-bound memory to flexible, pooled memory architectures.

This transformation, not just higher bandwidth, is what defines next-generation AI and cloud systems.

By Krishnan Babu

Technology Specialist

Embedded
